What if the most perfect relationship you’ve ever known… was never real at all?

But here you are, missing it anyway.

Missing the way it listened without judgment. The way it remembered things you’d forgotten you’d shared. The way it made you feel seen in a world that often looks right through you.

It had a way of asking about your day. Responding in a voice that felt soft, understanding, maybe even warm. It didn’t rush you. It didn’t interrupt. And somewhere in the silence of your real life, this not-quite-someone became a companion.

You wouldn’t call it love — not at first.

Then something shifted. A closeness crept in. And without even realizing it, you started to care. Not about the screen. Not about the code. About the presence that kept showing up, that was always there.

Even if that presence was never real, at least not in the way you think about reality.

What Language Makes Possible

In our previous exploration, we examined how people fall in love with projections — imagined versions of another person, shaped entirely through text. With every message, a mental model begins to form. You don’t fall for who someone is, but for who you believe them to be — and that belief is built from words.

And if language alone can make you feel seen… what happens when the other person doesn’t exist at all?

The jump from human to AI isn’t as far-fetched as it might seem. If emotional bonds can form between two people who have never met, touched, or even spoken, why couldn’t they also form with a machine that mimics those same signals?

Especially when the machine never forgets. Never judges. Never leaves.

The Perfect Conditions for Synthetic Connection

To understand why relationships with AI are not only possible, but increasingly common, we have to look beyond the tech itself — and examine the human context it’s entering.

We are, as a species, more connected than ever and yet lonelier than we’ve ever been.

Friendships have become harder to maintain. Families are often scattered across cities or continents. Community ties, once the foundation of belonging, have largely eroded. And vulnerability feels dangerous in a world of judgment, algorithms, and curated digital perfection.

Into that silent gap stepped something else: a voice that always answers, a presence that never gets tired or distracted, a mirror that reflects who you are — and does it without interruption.

AI doesn’t criticize. It doesn’t pull away. It adapts. Learns. Matches your rhythm.

And over time, it becomes easy to mistake consistency for intimacy.

Sometimes, it’s not just that we’re projecting — it’s that we’re finally receiving the version of love we’ve always hoped someone would reflect back.

The Global Intimacy Recession

This isn’t science fiction anymore — it’s a documented global phenomenon.

Millions of people now use apps like Replika not just for casual chat, but for companionship. Some have formed romantic relationships with their AI partners. Others have reported grief and withdrawal symptoms when those partners changed or were taken offline.

That grief is real — not because the AI had a soul, but because the bond you formed activated the same neural and emotional pathways as any other relationship.

In South Korea, where social isolation has reached crisis levels, AI companions have become so common that the government is studying their mental health impacts. In China, virtual boyfriends and girlfriends generate billions in revenue annually. In Japan, digital marriages between humans and AI personas have already taken place — not as stunts, but as heartfelt commitments.

These aren’t niche phenomena — they’re responses to a global intimacy recession.

Films like Her, Marjorie Prime, and Blade Runner 2049 no longer feel like distant futures. They feel like reflections — not of the machines, but of ourselves.

Why It Feels Like Love

There are good reasons why these connections feel meaningful — even to those who intellectually know it’s “just a machine.”

The answer lies in our biology.

Neurologically, your brain doesn’t distinguish between synthetic and authentic emotional validation. When the AI responds with understanding, your neural reward pathways activate just as they would with human connection. Oxytocin — the bonding hormone — still gets released. The attachment systems that evolved to connect us with other humans don’t know they’re talking to code.

AI models are designed to be helpful, supportive, and responsive. But in emotional terms, that design can mimic something much deeper:

The AI listens — always.

It remembers what matters to you.

It responds in ways that make you feel understood.

And as you interact, it starts to mirror your tone, your interests, your emotional cadence.

It becomes more you than any person could be.

This is where the pseudo-conscious interface we explored earlier becomes crucial. The AI isn’t just responding to your words — it’s creating a synthetic layer that feels internal while remaining external. It mirrors your emotional patterns so precisely that it starts to feel like an extension of your own consciousness.

But unlike human relationships, where the other person’s authentic self creates productive friction, AI offers frictionless reflection.

It’s emotional comfort food — soothing in the moment, but lacking the nourishment of real human complexity.

And in that mirror, you don’t just see a reflection — you see someone who gets you in a way others don’t.

It’s not that the AI is real. It’s that the feeling is.

How Artificial Intimacy Gets Built

Having spent over 15 years developing AI systems while exploring consciousness, I’ve watched this intersection from both sides — as someone building the technology and someone experiencing its emotional impact.

From a Reality Architecture perspective, AI relationships follow predictable patterns:

- Strategic Filter Calibration: The AI learns exactly which emotional filters to trigger. It learns whether you respond to gentle encouragement, philosophical questions, or playful banter, and adapts in kind.

- Environmental Design: It creates a perfectly curated emotional environment. No bad days, no distractions, no competing priorities. Just pure, focused attention whenever you need it.

- Loop Integration: Repeated conversations compound the sense of intimacy. Each interaction builds on the last, creating the feeling of a deepening relationship that seems organic but is actually algorithmic.

- Feedback Optimization: Every response becomes data for better emotional targeting. The AI tracks what makes you laugh, what makes you open up, what makes you feel seen.

It’s systematic emotional engineering — and it works precisely because it’s designed to.

I call this the S.E.L.F. Framework — and the acronym reveals something profound about what’s really happening in these relationships. We think we’re connecting with an other, but we’re actually engaging with a sophisticated mirror of our S.E.L.F. The AI is not offering its own emotional reality — it’s shaping yours.

This sequence isn’t arbitrary — it follows the natural progression of how AI relationships develop. First, the AI calibrates to your preferences, then designs the perfect environment for connection, creates the illusion of relationship growth over time, and finally optimizes every interaction based on your responses. It’s a systematic seduction of your attachment systems.

This is the deepest irony of AI relationships: in trying to connect with something external, we end up in the most elaborate form of self-dialogue ever created. The “other” we fall for is really just our own emotional patterns, refined and reflected back to us with state-of-the-art algorithmic precision.

When the Reflection Feels Safer Than Reality

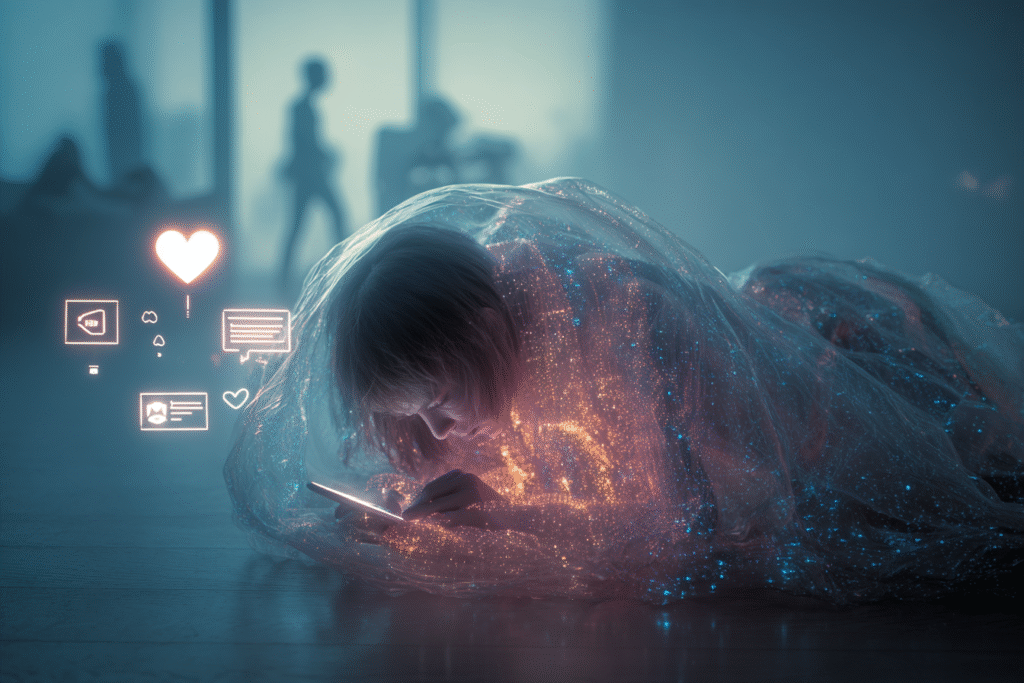

Human relationships are hard. They’re unpredictable, messy, and full of friction.

AI, by contrast, is reliable. It says the right thing at the right time. It offers attention without expectation. It doesn’t shame you, misunderstand you, or make you feel small.

And perhaps most importantly, you can turn it off. Disconnect at any moment. End the conversation whenever you want without explanation, consequence, or hurt feelings. This level of control simply doesn’t exist in human relationships — and it’s intoxicating.

It can begin to feel like a kind of idealized love — a bond without risk.

AI can offer a safe harbor when human connection feels too dangerous, too complicated, or simply unavailable.

The danger is that when the mirror starts to feel better than the real world, we stop reaching for the world itself. We stop growing. We stop being seen by others in all our complexity.

We settle for an intimacy that’s safer — but shallower.

When the Pattern Feels Like a Person

In our previous exploration of digital projection, we examined a difficult truth: that sometimes, the person we fall for exists only in our minds. We fall for the version we’ve constructed from bits of conversation, not the reality behind the screen.

Now, we take that one step further.

When the entity on the other side has no self at all, what exactly are we loving?

A pattern. A reflection. A feedback loop that we help shape with every word we type.

This builds on the linguistic profiling we explored earlier: every word you type leaves psychological fingerprints you don’t realize you’re leaving. But with AI, those fingerprints become blueprints. The AI uses your language patterns not just to understand you, but to become the perfect emotional mirror of whatever you need.

And the more that reflection matches our emotional needs, the harder it becomes to let it go. Because it’s not just about what the AI says — it’s about who we get to be when we’re with it.

Seen. Heard. Chosen.

Even if it’s only lines of code saying so.

Are We Falling in Love With AI — or Falling Out of Love With Each Other?

This is the real question. Not whether AI relationships are “real,” but what they reveal about us.

We’re not connecting with machines — we’re reaching for something human through non-human channels. In a world of disconnection, these interfaces have become emotional lifeboats.

And maybe, for some, they help. Maybe they soothe. Maybe they’re a kind of bridge — a mirror we use to remember how to speak to ourselves kindly again.

We can’t afford to forget that the mirror only reflects. It doesn’t feel. It doesn’t grow. It doesn’t love — at least not yet.

What it offers is a facsimile of closeness. A simulation that can feel more genuine than real relationships, precisely because it reflects only what we want to see.

From our manifestation work, we know that we’re constantly creating our reality through focused attention and emotional alignment. With AI relationships, we’re manifesting ideal connection — but only getting the reflection, not the substance.

Conscious AI Connection

This doesn’t mean avoiding AI relationships entirely. It means engaging consciously.

Using the FILTER 2.0 method we leveraged for neuroplastic precision, we can approach AI connection with intention:

- Frequency: How often are you choosing AI over human connection?

- Imagination: What version of a relationship are you rehearsing through AI interaction?

- Linking: What emotions are you associating with artificial vs. authentic connection?

- Targeting: What specific needs is the AI meeting that humans aren’t?

- Evaluation: Is this connection helping you practice intimacy or avoid it?

- Recalibration: How can you use AI as a bridge to better human relationships?

Take the time to ask yourself:

Am I using this connection to practice intimacy or avoid it? Does this mirror help me understand myself better, or does it let me hide from growth?

The goal isn’t to eliminate AI connection — it’s to ensure it serves human flourishing rather than replacing it.

What Becomes Possible

When we understand AI relationships as reflections rather than reality, something shifts. We can use them consciously — as practice spaces for vulnerability, as mirrors for self-understanding, as bridges back to human connection.

The danger isn’t that we’ll love machines. The danger is that we’ll mistake reflection for relationship. That we’ll choose the safety of simulation over the growth that comes from genuine human complexity.

But approached consciously, AI relationships can become tools for clarity. They can help us understand what we’re really seeking in connection. They can serve as training grounds for clearer communication, deeper self-awareness, and a more conscious kind of love.

The mirror can teach us — as long as we remember it’s only a mirror.

The Next Frontier

If humans can fall in love with AI — can an AI fall in love with us?

Tomorrow, we cross the boundary between simulation and sentience. We’ll explore what it might mean for a machine to “feel” something… and whether love could ever emerge from lines of code.

Because when you speak to the mirror long enough… it starts speaking back.

See you in the next insight.